My techniques with Cloudflare

Following up on my homelab post, Cloudflare was the key piece for exposing my backend services. Their Zero Trust Tunnel makes it easy to connect my host to a domain, and Load Balancer adds availability and resilience to my setup. In this post, I want to cover some techniques with these two services.

Performance Issues

Back to this setup: I bought the domains at Porkbun, the name servers point to Cloudflare, Cloudflare Tunnels route to the backend services, and the backend services are hosted on Hyper-V and Proxmox Ubuntu Server VMs, all connected through a Tailscale overlay network. It is a cheap, easy-to-use, easy-to-scale combination, but it is also fragmented, with separate components owned by different entities.

The most common issue with a fragmented (or decentralized) setup is performance; no matter how neat it is, there will always be a bottleneck somewhere that drags me down. I want to explore some tips to fix performance issues first, before getting into horizontal scaling solutions. And with a multi-component setup, performance issues come mostly from networking.

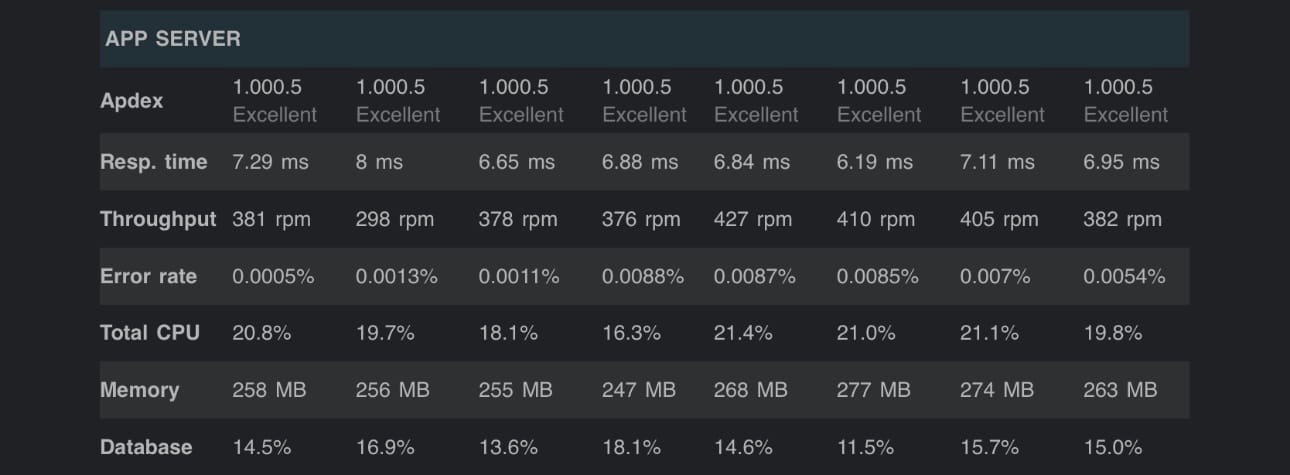

In the context of JustChill, the backend is a truly legacy codebase on Rails 5 and Ruby 2.7. There are plans to migrate to a Go or Rust framework, but that requires a huge amount of engineering resources. Looking closer, I found that the system is ancient but not actually slow: with Puma as the application server and some fine-tuning of workers and threads, REST API requests are handled in just a few milliseconds on average. The frontend side (mobile apps) is a different story: there the API latency jumps to nearly a second, sometimes a few seconds. That made it unfair to blame RoR as the bottleneck of this system, and it led me to work on the network issues instead.

The first thing I noticed was the inconsistent API speed: the same API call sometimes came through fast and other times really slowly. A quick search led me to the slow upload speed issue with the Hyper-V Virtual Switch; disabling Large Send Offload helped improve stability. Another change was on my internet router: it has an anti-hacking feature that limits the maximum number of TCP or UDP connections to 100, and raising that limit to 65000 allows more connections during traffic surges. The last change was to create 3 replicas of the Cloudflare Connector, deployed to 3 different computers, which makes sure Cloudflare Tunnel still has a connection to my services if one connector runs into trouble.
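To confirm this kind of intermittent latency, and to check whether a change such as disabling Large Send Offload actually helped, one option is to time the same endpoint repeatedly from a client machine and compare the median against the tail. The sketch below is only an illustration: the function names `measure` and `summarize` and the URL in the comment are hypothetical, not part of my setup. A large gap between the median and the p95 suggests the slowness is intermittent rather than constant.

```python
import statistics
import time
import urllib.request


def measure(url, attempts=20, timeout=10.0):
    """Time `attempts` full GET requests to `url`; return latencies in ms.

    Each call to urlopen opens a fresh connection, so every sample
    includes DNS lookup, TCP/TLS setup, and the response body download.
    """
    samples = []
    for _ in range(attempts):
        start = time.perf_counter()
        with urllib.request.urlopen(url, timeout=timeout) as response:
            response.read()
        samples.append((time.perf_counter() - start) * 1000.0)
    return samples


def summarize(samples_ms):
    """Return min, median, nearest-rank p95, and max of the samples."""
    ordered = sorted(samples_ms)
    # Nearest-rank percentile: rank = ceil(0.95 * n), in integer arithmetic.
    rank = (95 * len(ordered) + 99) // 100
    return {
        "min": ordered[0],
        "median": statistics.median(ordered),
        "p95": ordered[rank - 1],
        "max": ordered[-1],
    }


# Example with a hypothetical endpoint (replace with a real, cheap API route):
#   print(summarize(measure("https://api.example.com/health")))
```

Running it before and after each change (Large Send Offload, the router connection limit, the extra connectors) gives a rough way to see which fix moved the tail latency.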

Load Balancer and Failover

Another technique to improve overall performance is to deploy backend services to different locations and rely on Cloudflare's DNS-based Load Balancer to distribute API requests.

I used the DNS Load Balancer to provide a failover scenario for my setup. Basically, I have another computer rig at my parents' house, a very compact one with limited computing resources. It was built so that I just plug in the power and internet cables, and all backend services connect to Cloudflare through an independent Tunnel. With 2 separate tunnels serving the same backend stack, I used Cloudflare Load Balancer together with Cloudflare Tunnel to put my services behind the load balancer.

With this upgrade, JustChill and FlashChat gain another level of resilience: if my lab goes down, the failover trigger steers traffic to my backup server while I work on the fixes. This may also scale out to multiple geographic regions to shorten the distance requests have to travel.
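The failover behavior can be pictured as a small selection rule: keep the pools in priority order and send traffic to the first one whose health check passes. The sketch below is a conceptual model only, not Cloudflare's implementation or API; the `Pool` class, the `pick_pool` function, and the pool names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Pool:
    name: str       # label for the pool, e.g. one tunnel per site
    priority: int   # lower number means more preferred
    healthy: bool   # result of the most recent health check


def pick_pool(pools):
    """Return the preferred healthy pool, or None if every pool is down."""
    candidates = [p for p in pools if p.healthy]
    if not candidates:
        return None
    return min(candidates, key=lambda p: p.priority)


# Hypothetical pools: the main lab and the compact backup rig.
pools = [Pool("home-lab", 1, True), Pool("parents-house", 2, True)]
print(pick_pool(pools).name)  # home-lab

pools[0].healthy = False      # simulate the lab going down
print(pick_pool(pools).name)  # parents-house
```

In this model the backup rig receives no traffic while the lab is healthy, which fits a small machine with limited resources; once the lab's health check recovers, traffic returns to it.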